Technically advanced aircraft (TAA), those with a primary flight display (PFD), multi-function display (MFD), and GPS, are sexy. Pilots are drawn to them like Pooh Bear to honey. Besides being eye-catching, TAA attempt to address some of the biggest problems in aviation by providing pilots with a lot of supplementary safety information. Moving maps designed to improve situational awareness make it almost impossible to get lost. Databases put more information at the touch of a button than a thirty-pound chart case ever held. We can display more weather information in the cockpit than was even available 30 years ago. Combine all that with an autopilot that gives us time to gather and interpret the information, and you’d think we’d be a lot safer.

We’re not. Pilots of TAA kill themselves more often than steam gauge aviators—almost twice the rate, according to the NTSB. Technology advances address many of the leading causes of GA fatalities: loss of control, controlled flight into terrain, fuel problems, midair collisions and weather. So, where’s that improved safety?

Even more perplexing is that pilots flying TAA have higher ratings and more experience. A majority are instrument rated. Could our training be at fault?

We must recognize that advanced avionics are conceptually more complicated and require more time to learn and remain proficient. Focusing training on areas of automation that are prone to surprise will limit how often we are, well, surprised. Plus, we can’t let the vivid displays trick us into making bad decisions the way adult beverages did on our twenty-first birthday.

Cognitive Concept

To understand how and why we err during instrument flight, we need to briefly journey into the dark recesses of the mind. Our reality is simply the brain’s model of what is input through the senses. Humans experience a relatively narrow portion of the world. Your dog smells and hears many times better than you. Nonetheless, our sensory input provides enough information to create a functional mental model of the world.

Ever get burned touching a hot pan? This is an aberration in our mental model caused by an inability to sense the heat of the pan before touching it. After a few blistered fingers, we learn to (usually) check if the pan is hot. Learning and experience improve our mental model. A key aspect of mental modeling is that the model will always be imperfect. But we can improve that model over time, and even approach real accuracy.

Hazardous IMC hijinks are caused by inaccuracies in our mental models. Air France 447 stalled from 39,000 feet, smashing into the Atlantic at over 10,000 feet per minute. If the pilots of the flight were put in a simulator and told to do a stall, they’d likely recover easily. The key to understanding the crash is realizing they never attempted to recover from a stall because they never recognized it. It was not a possibility based on their mental models. (Actually, they may have come to the correct conclusion too late to change the outcome.) More common accidents caused by model limitations or inaccuracies for general aviation pilots are graveyard spirals or spins.

Instrument training includes recovering from unusual attitudes. These drills are a breeze because pilots are prepared for them. Yet pilots fail to recover from graveyard spirals/spins in flight due to misunderstanding the difference between their mental model and reality. Reaching out to others is one of the few ways to identify a mental model failure. In this paraphrased ASRS report, a pilot was saved with the help of an alert controller and CFI.

After overcorrecting the localizer position, I noticed my airspeed was high and my artificial horizon showed excessive bank. I heard my marker beacon three times through the struggle. The controller asked if any CFIs were on the frequency. One was…

Afterwards, I talked to the CFI who explained the graveyard spiral. I thought unusual attitude recovery was easy—it was when I practiced under the hood.

This type of report is not uncommon among pilots who survive graveyard spirals or spins. Good resource management includes bringing more people into the loop when necessary to help identify mental model failures.

Situational awareness is a broader mental model of reality. In addition to developing situational awareness, instrument pilots must also model instrument, navigation, and communication equipment. Steam gauge instruments are fairly easy to diagram and understand. We can draw a functional diagram of an airspeed indicator that describes the operation well enough to understand its errors. It’s simply impossible to do the same for an Air Data Computer (ADC) or Attitude Heading Reference System (AHRS).

Even FAA publications have trouble describing them in much detail.

Instrumentation in TAA incorporates a fundamental shift in understanding. Applying basic physics to mechanical constructs gives us a functional understanding. The same is not true with advanced avionics. Knowing that pressure transducers, accelerometers and chips make up the black boxes doesn’t provide a clue about how they operate. Instead, the functions of the black boxes are hidden within cryptic lines of software.

A steam gauge generally works predictably through most failures. An airspeed indicator fails by not indicating correctly—block the pitot tube and the airspeed remains constant until you change altitude. The same is not true with airspeed on a PFD. Red Xes appear on the airspeed tape when the ADC recognizes a failure. You’ll notice EFIS failures quicker, but there’s no residual useful information to be had.
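The steam-gauge failure described above can be sketched with a little physics. This is only a rough illustration, not a model of any real instrument: it assumes the pitot tube and its drain hole are both blocked so the ram pressure is trapped, uses the standard-atmosphere pressure formula, and uses the simple low-speed relation between impact pressure and airspeed. The function names are made up for the example.

```python
import math

RHO0 = 1.225           # ISA sea-level air density, kg/m^3
P0 = 101325.0          # ISA sea-level pressure, Pa
KT_PER_MS = 1.943844   # knots per metre/second
FT_TO_M = 0.3048


def static_pressure_pa(altitude_ft):
    """Standard-atmosphere static pressure (valid in the troposphere)."""
    h_m = altitude_ft * FT_TO_M
    return P0 * (1.0 - 2.25577e-5 * h_m) ** 5.25588


def indicated_kt(total_pa, static_pa):
    """Airspeed a simple indicator shows for a given pitot/static pressure pair."""
    impact = max(total_pa - static_pa, 0.0)  # the needle cannot read below zero
    return math.sqrt(2.0 * impact / RHO0) * KT_PER_MS


def blocked_pitot_reading_kt(ias_at_blockage_kt, blockage_alt_ft, current_alt_ft):
    """Reading after the pitot line is sealed: total pressure is frozen,
    but the static port still senses the current altitude."""
    v_ms = ias_at_blockage_kt / KT_PER_MS
    trapped_total = static_pressure_pa(blockage_alt_ft) + 0.5 * RHO0 * v_ms ** 2
    return indicated_kt(trapped_total, static_pressure_pa(current_alt_ft))
```

Under these assumptions, a needle frozen at 120 knots at 5,000 feet stays at 120 in level flight no matter what the airplane is really doing, reads higher in a climb, and reads lower in a descent. The gauge is wrong, but it is wrong in a way a pilot can reason about.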

How a system fails provides information on why. Without an understanding of what generates a failure, a pilot can only guess about the source. The NTSB reports an incident where a pitot tube became blocked in flight. The system interpreted the loss of ram air pressure as an ADC failure. The airspeed, altitude, and vertical speed tapes were replaced with red Xes. Troubleshooting a single round-gauge airspeed failure is quite different from troubleshooting a complete ADC failure. If the airspeed indication had simply stopped working, as it would in a traditional airplane, the pilot might have thought about turning on pitot heat. The NTSB asked the pilot in the above report about his use of the installed traditional standby instruments:

In a follow-up telephone interview, he [the pilot] stated that the event happened so quickly that he did not initially look at the backup airspeed indicator, but when he did, it was at zero. The pilot also stated that he did not look at either the backup altimeter or backup attitude indicator.

This pilot panicked—which is a normal response to suddenly realizing your mental model is wrong—and it resulted in him using the airframe parachute. Switching from traditional to advanced flight instruments takes a physical system and makes it abstract.

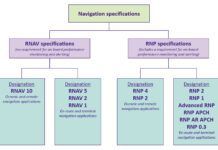

Navigation has always been an abstract task to some extent. The complexity increases in TAA. The black boxes that comprise the system aren’t just actual, but theoretical. People whose job it is to think about this stuff consider a “black box” to be any machine where the user can only see the inputs and outputs but not the process. A calculator is a black box; two plus two is input and four is displayed without requiring the user to have any understanding of the electronic processes inside. Indeed, the user need not have any knowledge of basic arithmetic either.

Advanced avionics are largely black boxes. A mismatch between the pilot’s mental model of the avionics modes and what the avionics are actually doing is called automation confusion or automation surprise. Since mental models are never perfect, automation surprises are inevitable. Training can help minimize the potential for automation confusion and create better mental models.

Training

The FAA recognized TAA challenges and developed the FAA-Industry Training Standards (FITS) in 2003 to create scenario-based training (SBT), which is now common. SBT aims to eliminate the gap between practice and performance through experience. Entering a graveyard spiral during training is better practice than recovering from an unusual attitude. These experiences expand a person’s mental model before getting a burned hand. But FITS failed to address the advanced technology of TAA.

My crazy uncle tries to convince me the world is run by five secretive banking families. While I don’t subscribe to his delusions, there is an organization that dictates practically everything about the avionics in our aircraft. The Radio Technical Commission for Aeronautics (RTCA) was founded in 1935 and “provided the foundation for virtually every modern technical advance in aviation,” in its own words. The organization, composed of the FAA and industry, creates all the avionics design standards.

Understanding the standards would take some of the black out of the boxes. Just as the FARs are the foundation upon which pilots operate, the RTCA standards are the underpinnings of black box design. To learn about the minimum performance standards for AHRS, you can check out DO-334, but it’ll cost you $150 and require fluency in engineer-speak, which isn’t realistic for most of us.

Professors, with access to free undergraduate labor, diagram mode functions and apply mathematical analysis to find areas ripe for automation surprise. They found that surprise is often caused by indirect mode changes, multiple functions for the same button, and poor feedback on what the automation is doing.

An example of an indirect mode change is an HSI that changes from GPS to LOC data automatically. This is also an example of poor feedback because the change occurs without any warning. When flying with the autopilot, this can lead to some real surprises as the autopilot tries to adjust to the new input and error signals. Autopilots are also a source of problems from one button performing multiple functions, where that one button might cycle through multiple modes with successive pushes.
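These traps can be sketched as a toy state machine. Everything here is hypothetical: the mode names, the button, and the class are invented for illustration and do not describe any real autopilot or HSI. It only shows one button stepping through several modes, and a navigation source that changes with no pilot action and no annunciation.

```python
from dataclasses import dataclass, field

# Hypothetical vertical modes; real autopilots use different names and logic.
VERTICAL_MODES = ["PITCH", "VS", "ALT"]


@dataclass
class ToyAvionics:
    nav_source: str = "GPS"
    vertical_mode: str = "PITCH"
    annunciations: list = field(default_factory=list)

    def press_vertical_button(self):
        """One button, several functions: each push selects the next mode."""
        i = VERTICAL_MODES.index(self.vertical_mode)
        self.vertical_mode = VERTICAL_MODES[(i + 1) % len(VERTICAL_MODES)]
        self.annunciations.append(f"VERT {self.vertical_mode}")

    def capture_localizer(self):
        """Indirect mode change: the source switches on its own,
        and (poor feedback) nothing is annunciated."""
        self.nav_source = "LOC"


def is_surprised(believed_source, avionics):
    """Automation surprise: the pilot's mental model disagrees with the box."""
    return believed_source != avionics.nav_source
```

In this sketch a pilot who counts button pushes wrong ends up in a mode he didn’t intend, and a pilot who still believes the HSI is showing GPS after `capture_localizer()` runs is, by this definition, surprised. Diagramming modes this way is one way to find the spots worth practicing.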

These are just a couple of examples of areas that cause automation surprise. Identifying, experiencing and training for them will prevent some unpleasant automation curveballs.

It is impossible to identify all of the dark corners in automation, partially because these areas are defined by the pilot’s current mental model. Pilots need a game plan for when automation doesn’t behave. Instead of trying to figure out why—it is easy to get sucked in—give the automation a time out and take control. Remember: aviate, navigate, automate, communicate (…or something like that).

Risk Compensation

Let’s do a little thought experiment. How would you drive without wearing a seatbelt? It probably seems dangerous and risky. You would likely drive more cautiously to offset some of the increased risk. While this experiment involved taking away a safety device, the opposite is also true. When safety devices are added, people take more risks, offsetting some of the safety gains. Human factors geeks call this risk compensation.

These same geeks realize that safety is always balanced against production. In our case, production is utility. Tech improvements result in increased utility. It is important to realize that these improvements can either result in greater use at the same level of safety or the original utility with an increased level of safety. TAA are sold as having greater utility than traditional airplanes. Questioning whether you would make a trip using an old six pack clarifies how you use technology.

A study by NASA found that GA pilots preferred TAA because the pilots perceived that the TAA reduced the workload and improved situational awareness, which would lead to increased safety. The pitfalls of advanced avionics were acknowledged, but mostly as applying to “the other guy.” Our perceptions are wrong.

Like Pablo Escobar’s girlfriend, TAA are sexy and dangerous. The fatal crash rate of TAA is almost twice that of aircraft with steam gauges, but it doesn’t have to be. Advanced avionics add complexity and require more time to maintain proficiency. Paying attention to inadvertent mode changes, multiple functions for a button, and areas of low feedback can identify areas prone to automation surprise. Plan for automation misbehavior and have a strategy ready so you don’t get sucked into the commotion. Finally, a self-assessment can identify risk compensation.

TAA are sexy mistresses, full of lies and mysteries that require proper precautions and understanding for a successful relationship.

Jordan Miller, ATP, CFII, MEI flies for a major U.S. airline. He has actively taught under Parts 61, 135 and 141.

Studying GA in any detail is a challenge. There is a dearth of reliable information about how GA airplanes are used. The FAA relies on self-reported information from the General Aviation and Part 135 Activity Survey, a system prone to error. One way of examining GA is to compare like conditions. In the late 1990s and early 2000s the FAA initiated a pilot project called Capstone to test technologies now associated with NextGen. Analysis of the project showed that the installation of a GPS, MFD, and ADS-B could reduce Part 135 commercial accidents in Alaska by 38 percent. While not all of the gains are applicable to GA operations elsewhere, reducing the frequency of controlled flight into terrain (CFIT) is.

The project used a rudimentary terrain database only effective for enroute use. Research showed that equipping aircraft with Capstone avionics avoided 44 percent of all preventable CFIT accidents and an estimated 90 percent of preventable enroute accidents. Because CFIT is the second leading cause of fatal GA accidents, a 44 percent reduction in CFIT accidents should have a significant impact on rates.

Most people would think a big red blob on the MFD course line would cause pilots to climb or change direction. Armchair pilots would argue that all CFIT accidents should have been eliminated. They weren’t, due to training effectiveness and risk compensation. Training effectiveness is how well training is implemented by pilots. The fact is that knowledge transfer from training is not perfect and mistakes will always be made, like accidentally turning off the terrain information or forgetting to turn it on altogether. Having information available doesn’t result in complete elimination of a hazard. —JM